Introduction

Kubernetes is a free and open-source platform for managing containerized workloads and services that supports both declarative configuration and automation. It is extensible and portable, and it has a large, rapidly growing ecosystem. Its aim is to provide more dependable ways to manage distributed components and services across many kinds of infrastructure.

In this blog post, we will take a beginner’s view of the main Kubernetes components, which should be useful for anyone who wants to learn the fundamentals of Kubernetes and how its components work. We will cover the fundamental Kubernetes structure and components, from what a pod consists of to how objects are scheduled and deployed across worker nodes. To design and build a solution that uses Kubernetes as the orchestrator for containerized applications, it is critical to understand the architecture of the cluster as a whole.

This post may look complicated at first glance, but it is not. I’m confident that by the end of it, you’ll understand how these Kubernetes components interact with one another.

History

Google developed an internal cluster manager called Borg (and later an experimental successor called Omega) to deploy and manage thousands of Google applications and services across its clusters. In 2014, Google introduced Kubernetes, an open-source platform written in Go, and in 2015 it donated the project to the Cloud Native Computing Foundation (CNCF).

Kubernetes Architecture Overview

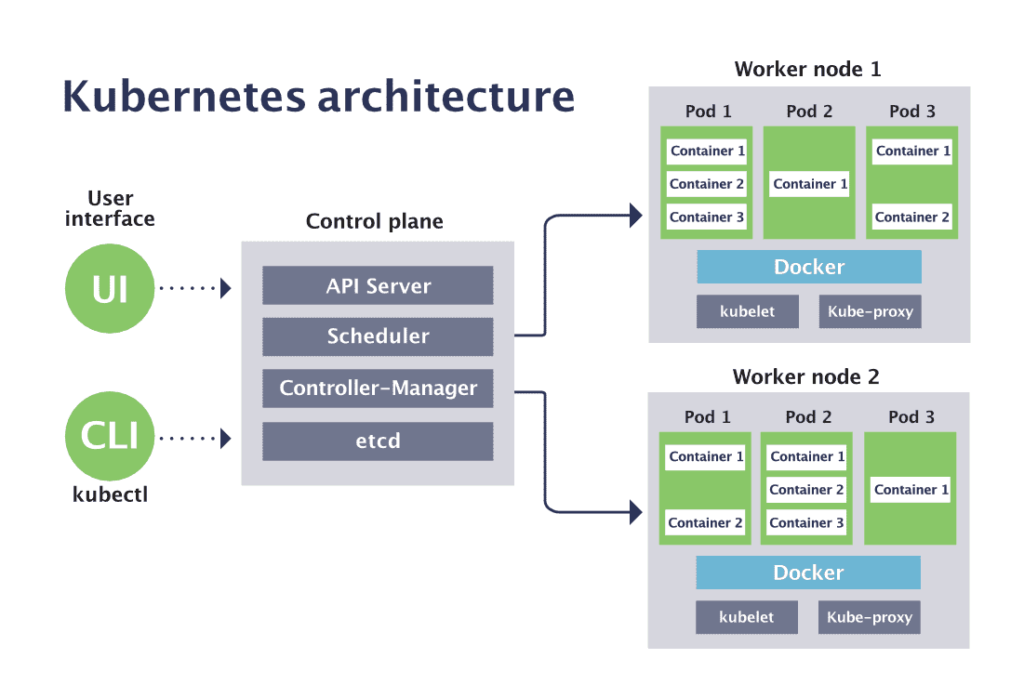

A Kubernetes cluster has two main parts: the control plane and the data plane, which consists of the worker nodes (the machines used as compute resources).

The control plane hosts the components used to manage the Kubernetes cluster.

Worker nodes can be virtual machines (VMs) or physical machines. A node hosts pods, which run one or more containers.

Let’s dig deeper into the major Kubernetes components, which are:

Master Components

- kube-apiserver

- kube-scheduler

- kube-controller-manager

- etcd database

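Worker Node Components

- Pods

- Container runtime engine

- kubelet

- kube-proxy

- kubectl (strictly a client tool rather than a node component: it runs on any machine and sends requests to kube-apiserver)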

We will go over each of these components in detail and see how they depend on one another.

Master Node

The master node manages the Kubernetes cluster and is the entry point for all administrative tasks. You can talk to it through the CLI, a GUI, or the API. For fault tolerance, a cluster can run more than one master node in high-availability (HA) mode. In that mode, every kube-apiserver instance serves requests (typically behind a load balancer), while kube-scheduler and kube-controller-manager use leader election: one instance acts as the leader and does the work, and the others stay on standby as followers, ready to take over if the leader fails.
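To make the leader/follower idea concrete, here is a minimal Python sketch of lease-based leader election, the general pattern that kube-scheduler and kube-controller-manager replicas follow. It is a toy under stated assumptions: `Lease` and `try_acquire_or_renew` are hypothetical names, the lease lives in local memory instead of in a Lease object stored through the API server, and there is no protection against two replicas updating it at the same moment.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """Toy lock record shared by all replicas (hypothetical)."""
    holder: Optional[str] = None
    renew_time: float = 0.0
    duration: float = 15.0  # seconds the lease stays valid without renewal


def try_acquire_or_renew(lease: Lease, candidate: str, now: float) -> bool:
    """Return True if `candidate` holds the lease after this attempt."""
    expired = now - lease.renew_time > lease.duration
    if lease.holder is None or lease.holder == candidate or expired:
        # Nobody holds the lease, we already hold it, or the previous
        # leader stopped renewing: take (or keep) the lease.
        lease.holder = candidate
        lease.renew_time = now
        return True
    return False  # another replica holds a valid lease; stay a follower


if __name__ == "__main__":
    lease = Lease()
    print(try_acquire_or_renew(lease, "scheduler-a", now=0.0))   # True: a leads
    print(try_acquire_or_renew(lease, "scheduler-b", now=5.0))   # False: b follows
    # scheduler-a stops renewing; once the lease expires, b takes over
    print(try_acquire_or_renew(lease, "scheduler-b", now=20.0))  # True
```

In a real cluster, the lease is a Kubernetes object, and the API server rejects conflicting updates through resource versions, so two replicas cannot both win the same round.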

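To store the cluster state, Kubernetes uses etcd, a distributed key-value store. The kube-apiserver instances on the master nodes connect to etcd, and the other components read and write cluster state through the API server rather than talking to etcd directly.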

Let me explain each of these components in turn.

kube-apiserver

kube-apiserver exposes the Kubernetes API and serves as the front end of the control plane. It handles both external and internal requests: it determines whether a request is valid and then processes it. You can reach the API through the kubectl command-line interface, through other tools such as kubeadm, or directly through REST calls.
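Every kubectl command ultimately becomes an HTTP request against a REST path. The hypothetical helper below builds those paths following the API's URL conventions: core resources such as pods live under `/api/v1`, while resources in named API groups such as `apps` live under `/apis/<group>/<version>`. It only constructs strings and does not contact a cluster.

```python
from typing import Optional


def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None,
                  name: Optional[str] = None) -> str:
    """Build the REST path for a Kubernetes resource (hypothetical helper).

    The core API group is written as "" and lives under /api/<version>;
    named groups such as "apps" live under /apis/<group>/<version>.
    """
    segments = ["/api", version] if group == "" else ["/apis", group, version]
    if namespace is not None:  # cluster-scoped resources (e.g. nodes) omit this
        segments += ["namespaces", namespace]
    segments.append(resource)
    if name is not None:
        segments.append(name)
    return "/".join(segments)


if __name__ == "__main__":
    print(resource_path("", "v1", "pods", namespace="default"))
    # /api/v1/namespaces/default/pods
    print(resource_path("apps", "v1", "deployments", namespace="default", name="web"))
    # /apis/apps/v1/namespaces/default/deployments/web
    print(resource_path("", "v1", "nodes"))
    # /api/v1/nodes
```

Against a live cluster, `kubectl get --raw /api/v1/namespaces/default/pods` sends a GET request to the first path and prints the raw JSON response.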

kube-scheduler

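kube-scheduler assigns newly created pods to nodes. For each pod, it first filters out the nodes that cannot run it, then scores the remaining nodes and binds the pod to the highest-scoring one. Its decisions take into account user-defined constraints and policies such as resource requests, affinity and anti-affinity rules, taints and tolerations, priority, persistent volumes (PVs), and more.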
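The scheduler works in two phases: it filters out nodes that cannot run a pod, then scores the rest. The following Python sketch models that under simplifying assumptions: the `Node` and `Pod` classes are hypothetical, taints and tolerations are matched as exact strings (real tolerations support operators and effects), and the score is a rough stand-in for the "least allocated" strategy, which favors nodes with more resources left over.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_m: int                 # allocatable CPU, millicores
    mem_mi: int                # allocatable memory, MiB
    used_cpu_m: int = 0        # CPU already requested by pods on this node
    used_mem_mi: int = 0       # memory already requested by pods on this node
    taints: set = field(default_factory=set)  # e.g. {"dedicated=gpu:NoSchedule"}


@dataclass
class Pod:
    name: str
    cpu_m: int                 # CPU request, millicores
    mem_mi: int                # memory request, MiB
    tolerations: set = field(default_factory=set)


def feasible(pod: Pod, node: Node) -> bool:
    """Filter phase: requests must fit and every taint must be tolerated."""
    fits = (node.used_cpu_m + pod.cpu_m <= node.cpu_m
            and node.used_mem_mi + pod.mem_mi <= node.mem_mi)
    return fits and node.taints <= pod.tolerations  # subset check


def score(pod: Pod, node: Node) -> float:
    """Score phase: average fraction of CPU and memory left after placement."""
    cpu_left = (node.cpu_m - node.used_cpu_m - pod.cpu_m) / node.cpu_m
    mem_left = (node.mem_mi - node.used_mem_mi - pod.mem_mi) / node.mem_mi
    return (cpu_left + mem_left) / 2


def schedule(pod: Pod, nodes: list) -> Optional[str]:
    candidates = [n for n in nodes if feasible(pod, n)]
    if not candidates:
        return None  # the real scheduler would leave the pod Pending
    return max(candidates, key=lambda n: score(pod, n)).name


if __name__ == "__main__":
    nodes = [
        Node("node-a", 4000, 8192, used_cpu_m=3500, used_mem_mi=1024),
        Node("node-b", 4000, 8192, used_cpu_m=1000, used_mem_mi=2048),
        Node("gpu-1", 8000, 32768, taints={"dedicated=gpu:NoSchedule"}),
    ]
    print(schedule(Pod("web", 1000, 512), nodes))  # node-b
    gpu_pod = Pod("trainer", 1000, 512, tolerations={"dedicated=gpu:NoSchedule"})
    print(schedule(gpu_pod, nodes))  # gpu-1
```

Here `node-a` is filtered out for lack of CPU and `gpu-1` for an untolerated taint, so the web pod lands on `node-b`; the pod that tolerates the taint prefers the emptier `gpu-1`.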

kube-controller-manager

kube-controller-manager runs a set of control loops, called controllers, that monitor and regulate the state of a Kubernetes cluster. Each controller watches the current state of the cluster and the objects in it through the API server, and makes changes to move the cluster toward the desired state declared by the cluster operator.

The controller manager bundles many controllers that automate work at the cluster or pod level, including the replication, namespace, service account, Deployment, StatefulSet, and DaemonSet controllers.
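At the heart of every controller is a reconcile step: compare the desired state with the observed state and act on the difference. The sketch below models a replica controller in Python; `reconcile`, `apply_actions`, and the `web-N` naming scheme are hypothetical, and a real controller reads and writes objects through the API server rather than a local set.

```python
def reconcile(desired: int, observed: set, prefix: str = "web") -> list:
    """One pass of a simplified replica controller.

    Compares the desired replica count with the observed pod names and
    returns the actions that would close the gap; it does not perform them.
    """
    actions = []
    diff = desired - len(observed)
    if diff > 0:
        taken = set(observed)
        i = 0
        while len(actions) < diff:
            name = f"{prefix}-{i}"
            if name not in taken:  # pick the lowest unused hypothetical name
                actions.append(("create", name))
                taken.add(name)
            i += 1
    elif diff < 0:
        # Real controllers rank pods before deleting; this sketch uses name order
        actions = [("delete", name) for name in sorted(observed)[:-diff]]
    return actions


def apply_actions(observed: set, actions: list) -> set:
    """Pretend to execute the actions and return the new observed state."""
    current = set(observed)
    for verb, name in actions:
        if verb == "create":
            current.add(name)
        else:
            current.discard(name)
    return current


if __name__ == "__main__":
    pods = {"web-1"}
    # Keep reconciling until observed state matches desired state
    while actions := reconcile(3, pods):
        print(actions)
        pods = apply_actions(pods, actions)
    print(sorted(pods))
```

Because the loop only reacts to the gap between desired and observed state, the same code handles scaling up, scaling down, and replacing pods that disappeared.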

Etcd database

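etcd is a consistent, distributed key-value store that holds your cluster’s state and configuration. It is fault tolerant: it replicates data across its members using the Raft consensus algorithm, so the store stays available as long as a majority of members are healthy.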
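etcd's fault tolerance comes from Raft majority quorums: a write is committed only after a majority of members have accepted it. The arithmetic is short enough to show directly in Python:

```python
def quorum(members: int) -> int:
    """Votes needed to commit a write: a strict majority of members."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """Members that can fail while a quorum is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(f"{n} members: quorum {quorum(n)}, "
              f"tolerates {failures_tolerated(n)} failure(s)")
```

Three members tolerate one failure and five tolerate two, but four still tolerate only one. This is why etcd clusters are usually sized with an odd number of members.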

Worker Node

A worker node is a virtual or physical server that runs your applications and is managed by the master node. Pods are scheduled onto worker nodes, which have the tools needed to run them and connect them to the network. A pod is essentially a group of one or more containers.

To reach applications from outside the cluster, clients connect to the worker nodes (for example, through a NodePort Service or a load balancer in front of the nodes), not to the master nodes.

Let’s explore the worker node components.

Pods

A pod represents a single instance of an application and is the smallest deployable unit in the Kubernetes object model. Each pod consists of one or more tightly coupled containers, plus configuration that governs how those containers run, such as shared storage volumes and network settings.
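As a concrete illustration, here is a pod with two tightly coupled containers that share a volume, written as a Python dictionary and serialized to JSON (the Kubernetes API accepts JSON manifests as well as YAML). The pod name, container names, images, and paths are illustrative choices, not taken from any particular application.

```python
import json


def two_container_pod(name: str = "web") -> dict:
    """A pod whose two containers share an emptyDir volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "volumes": [{"name": "shared", "emptyDir": {}}],
            "containers": [
                {
                    # Main container: serves whatever is in the shared volume
                    "name": "nginx",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [
                        {"name": "shared", "mountPath": "/usr/share/nginx/html"}
                    ],
                },
                {
                    # Helper container: rewrites the page every five seconds
                    "name": "content-writer",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c",
                                "while true; do date > /shared/index.html; sleep 5; done"],
                    "volumeMounts": [{"name": "shared", "mountPath": "/shared"}],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(two_container_pod(), indent=2))
```

Saving the printed output to a file such as `pod.json` and running `kubectl apply -f pod.json` would ask the API server to create the pod; both containers are then scheduled together onto the same node.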

Container Runtime Engine

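Each node runs a container runtime engine, which is responsible for running containers. The kubelet talks to it through the Container Runtime Interface (CRI), so any CRI-compatible runtime that runs Open Container Initiative (OCI) images can be used; containerd and CRI-O are common choices. Docker Engine was historically the most popular runtime, but Kubernetes removed its built-in Docker support (dockershim) in version 1.24, so Docker now requires an adapter such as cri-dockerd. The rkt runtime, once an alternative, is no longer maintained.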

kubelet

Each node runs a kubelet, an agent that communicates with the Kubernetes control plane. The kubelet ensures that the containers described in the pod specifications assigned to its node are running, and it manages their lifecycle. In short, it carries out the actions commanded by the control plane.
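The kubelet's core job is a sync loop: compare the pods assigned to its node with what the container runtime is actually running, then start or stop containers to close the gap. The Python sketch below models one pass of that loop. `FakeRuntime` and `sync_pods` are hypothetical stand-ins; the real kubelet talks to the runtime over the Container Runtime Interface and also handles probes, volumes, and status reporting.

```python
class FakeRuntime:
    """In-memory stand-in for a container runtime (hypothetical)."""

    def __init__(self) -> None:
        self.containers: dict = {}  # "pod/container" -> image

    def start(self, key: str, image: str) -> None:
        self.containers[key] = image

    def stop(self, key: str) -> None:
        self.containers.pop(key, None)


def sync_pods(assigned_pods: list, runtime: FakeRuntime) -> None:
    """One sync pass: make the runtime match the pods bound to this node."""
    desired = {
        f"{pod['name']}/{c['name']}": c["image"]
        for pod in assigned_pods
        for c in pod["containers"]
    }
    # Stop containers the control plane no longer wants on this node
    for key in list(runtime.containers):
        if key not in desired:
            runtime.stop(key)
    # Start missing containers and replace ones running a different image
    for key, image in desired.items():
        if runtime.containers.get(key) != image:
            runtime.stop(key)
            runtime.start(key, image)


if __name__ == "__main__":
    rt = FakeRuntime()
    pods = [{"name": "web", "containers": [{"name": "nginx", "image": "nginx:1.25"}]}]
    sync_pods(pods, rt)
    print(rt.containers)  # {'web/nginx': 'nginx:1.25'}
    sync_pods([], rt)     # the pod was removed from this node
    print(rt.containers)  # {}
```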

kube-proxy

Every node runs kube-proxy, a network proxy that implements Kubernetes Services. It maintains network rules on the node so that traffic sent to a Service, from inside or outside the cluster, reaches one of the pods backing it. kube-proxy either forwards that traffic itself or relies on the packet filtering layer of the operating system (such as iptables or IPVS on Linux).
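The sketch below models the core of what kube-proxy sets up for a Service: a stable virtual IP and port that map to a changing set of backend pod addresses. `ServiceProxy` is hypothetical and picks backends round-robin; in practice, kube-proxy's iptables mode selects a backend at random, and IPVS mode defaults to round-robin. The IP addresses are illustrative.

```python
import itertools


class ServiceProxy:
    """Toy model of the rules kube-proxy maintains for Services (hypothetical)."""

    def __init__(self) -> None:
        # (cluster IP, port) -> (backend "podIP:port" list, round-robin counter)
        self._services: dict = {}

    def update_endpoints(self, cluster_ip: str, port: int, endpoints: list) -> None:
        # Called whenever the set of ready pods behind the Service changes
        self._services[(cluster_ip, port)] = (list(endpoints), itertools.count())

    def route(self, cluster_ip: str, port: int) -> str:
        """Pick the backend that a new connection to the Service should reach."""
        if (cluster_ip, port) not in self._services:
            raise LookupError(f"no Service at {cluster_ip}:{port}")
        backends, counter = self._services[(cluster_ip, port)]
        if not backends:
            raise LookupError(f"Service {cluster_ip}:{port} has no ready endpoints")
        return backends[next(counter) % len(backends)]


if __name__ == "__main__":
    proxy = ServiceProxy()
    proxy.update_endpoints("10.96.0.10", 80, ["10.244.1.5:8080", "10.244.2.7:8080"])
    for _ in range(3):
        print(proxy.route("10.96.0.10", 80))
```

Clients only ever see the Service address; when pods come and go, only the endpoint list changes.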

Container Networking

Container networking enables containers to communicate with hosts and with other containers. In Kubernetes, it is usually implemented through the Container Network Interface (CNI), a CNCF project whose specification is used by Kubernetes, Apache Mesos, Cloud Foundry, Red Hat OpenShift, and others.

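CNI offers a standardized, minimal specification for network connectivity in containers. Older kubelet versions enabled it with the --network-plugin=cni command-line option and read configuration files from the directory given by --cni-conf-dir. Since Kubernetes 1.24, those kubelet flags have been removed: the container runtime (for example, containerd or CRI-O) loads the CNI configuration, by default from /etc/cni/net.d, and uses it when setting up networking for each pod.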
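A CNI network configuration is a small JSON document. The hypothetical helper below builds one for the reference `bridge` plugin with `host-local` IP address management, validating the pod subnet with Python's `ipaddress` module. The network name and subnet are illustrative values; container runtimes such as containerd and CRI-O commonly read files like this from /etc/cni/net.d.

```python
import ipaddress
import json


def bridge_conf(name: str, pod_cidr: str, bridge: str = "cni0") -> dict:
    """Build a CNI config for the 'bridge' plugin with 'host-local' IPAM."""
    subnet = ipaddress.ip_network(pod_cidr)  # raises ValueError if malformed
    return {
        "cniVersion": "0.4.0",
        "name": name,
        "type": "bridge",        # plugin binary that wires pods to a Linux bridge
        "bridge": bridge,
        "isGateway": True,       # give the bridge an IP so pods can route through it
        "ipMasq": True,          # masquerade traffic leaving the pod subnet
        "ipam": {
            "type": "host-local",  # hands out pod IPs from a per-node range
            "subnet": str(subnet),
            "routes": [{"dst": "0.0.0.0/0"}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(bridge_conf("mynet", "10.244.1.0/24"), indent=2))
```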

That’s all for this post! I hope it helps you understand Kubernetes architecture better.

Here are some good resources to start your journey with Kubernetes (K8s):

Kubernetes Documentation: https://kubernetes.io/docs/home/

Kubernetes Releases: https://kubernetes.io/releases/

Certified Kubernetes Administrator: https://www.cncf.io/certification/cka/

Exam Curriculum (Topics): https://github.com/cncf/curriculum

Candidate Handbook: https://www.cncf.io/certification/candidate-handbook

Exam Tips: http://training.linuxfoundation.org/go//Important-Tips-CKA-CKAD